
19 August, 2019

The future-shaping power of new technologies

In this series of articles, we focus on four key technologies that are changing the face of the financial industry today. Over the coming weeks, we will devote a separate blog post to each of the following topics, which we consider the most influential technologies in financial services today.

1. The tech behind Open Banking and PSD2

2. Microservices and Containerisation

3. Big Data

4. Artificial Intelligence and Machine Learning

There is no denying that the future of financial services will belong to those who understand the tech behind it. This guide will help you get a crystal clear picture. In this second part, we cover how microservices and containerisation shape the future of finance.

2. Microservices and Containerisation

Imagine transferring €1000 to a friend with a banking app. Now imagine how it feels when that app takes minutes to load. Not good. Maybe next time you’d rather pay with PayPal — and that is not something that’s going to make a bank happy. A recent survey of financial institutions found that about…

...85 per cent consider their core technology to be too rigid and slow. Consequently, about 80 per cent are expected to replace their core banking systems within the next five years.

These core systems are usually monolith backends (we’ll get to that later), sometimes still running on mainframes! (Btw, mainframes are large, highly reliable computers built to process huge volumes of transactions.)

These days, with new fintech startups cropping up every day, releasing new features every month, we can’t accept taking years to develop new core banking features. Truth be told, these old systems are written in programming languages that are almost extinct (read about COBOL on a museum’s website), making it incredibly hard and expensive to assemble a development team that can do the job. As a result, your teams cannot react fast enough to the growing number of financial transactions and changing customer needs.

You can do two things in this situation:

- “Simply” — through blood and sweat — replace the old core system with a new one;

- Replace certain parts or functionalities one by one with microservices.

The first solution is tricky. Moving to a new core and migrating all the data while preserving business continuity is very risky. We’ve seen from close up how some banks introduced new cores. It’s nasty. It can result in multiple days of downtime and embarrassing bugs after the restart. Your customers will not like it. And for some big players that’s way too much risk.

Here the second solution comes into play. Instead of tearing down everything, we start replacing core functionalities with microservices. One by one.
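To make the "one by one" idea more concrete, here is a minimal sketch of how requests could be routed during such a migration. It is only an illustration under our own assumptions: `legacy_core`, `payments_service` and `MigrationRouter` are hypothetical names, and a real bank would do this at the API gateway or integration layer rather than in a few lines of Python.

```python
from typing import Callable, Dict

Handler = Callable[[dict], str]


def legacy_core(request: dict) -> str:
    """Stand-in for the old monolithic core system (hypothetical)."""
    return f"legacy core handled {request['feature']}"


def payments_service(request: dict) -> str:
    """Stand-in for a newly extracted payments microservice (hypothetical)."""
    return f"payments microservice handled {request['feature']}"


class MigrationRouter:
    """Sends a request to a microservice if its feature has been migrated,
    otherwise falls back to the legacy core."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, feature: str, handler: Handler) -> None:
        # Moving one feature over is a single routing change.
        self._migrated[feature] = handler

    def handle(self, request: dict) -> str:
        handler = self._migrated.get(request["feature"], self._legacy)
        return handler(request)


router = MigrationRouter(legacy_core)
router.migrate("payments", payments_service)

print(router.handle({"feature": "payments"}))  # served by the microservice
print(router.handle({"feature": "accounts"}))  # still served by the old core
```

The point of the sketch is that every other feature keeps running on the old core untouched while payments moves, so the risk of each step stays small.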

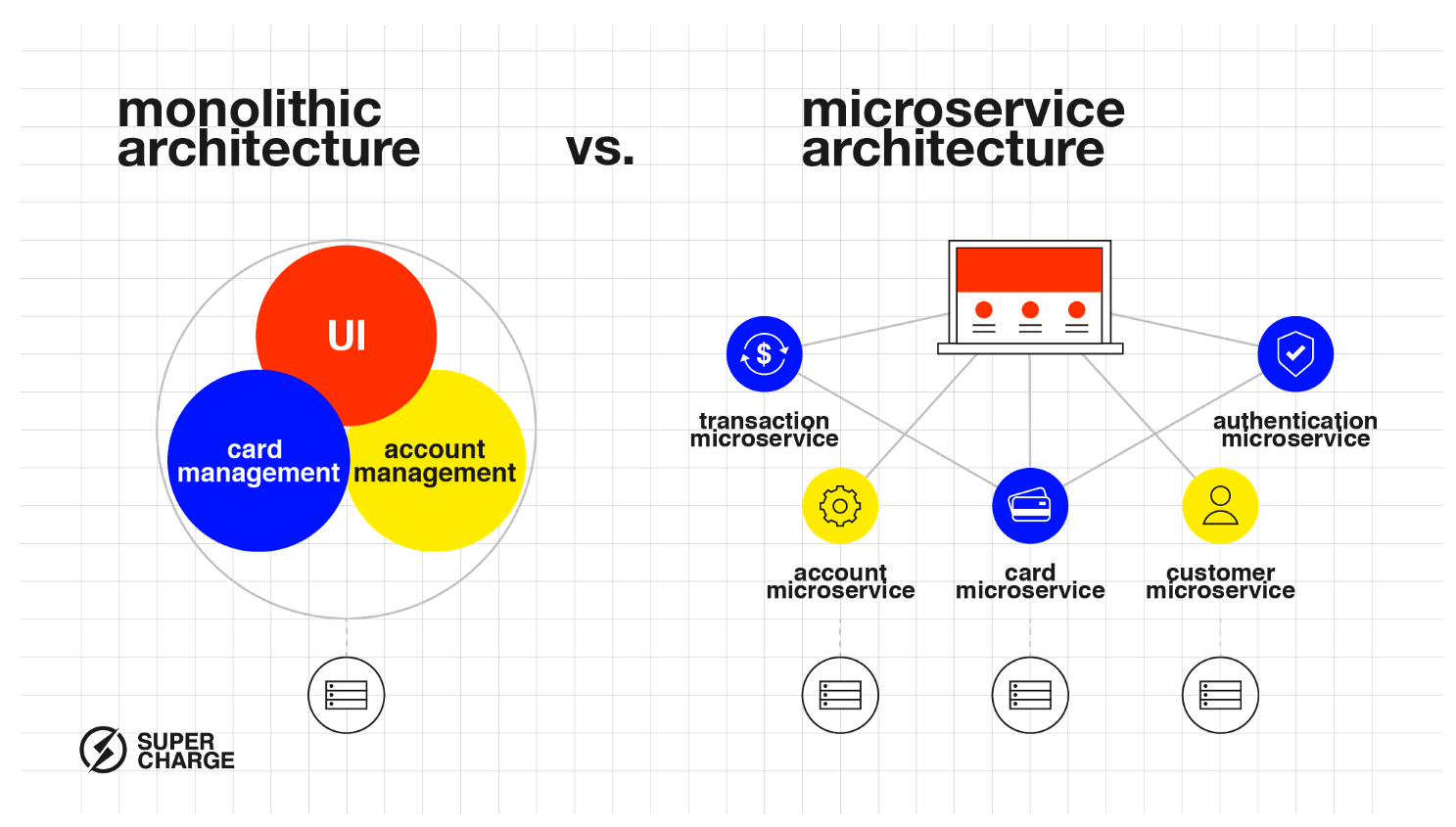

Monolith architecture vs. microservices

Figure 1: How the monolithic and microservice architectures differ

A monolith means that every service a piece of software provides runs within the same application.

Imagine a core banking system where authentication, customers, accounts, transactions and payments are all handled by one application connected to one huge database. Should something happen to the database connection, or should the application crash, everything can go down. The customer will see no transaction history and will be very upset at being unable to initiate payments.

Another common problem with this architecture is that if the demand for certain services grows, we are largely unable to scale only that part of the application. We have to scale everything, which results in slow, painful and costly projects. And in the end, we might not even solve the real issues.

Microservice architecture comes to the rescue! Every service (accounts, transactions, etc.) runs as a standalone, separate entity, creating a loosely coupled system. The services can communicate with and rely on each other, but they run separately and connect to separate databases. This allows us to scale them one by one when loads grow. And think about what happens when one of our services goes down: this is a much better scenario.

All of the other services — which do not depend on the failing service — can keep working properly. Another great advantage is that every microservice can be implemented with the technology that’s best suited for the given use-case, using an optimised storage structure and optimised processing solution. Separate teams can work on the microservices, implementing a well-defined, bounded set of functionalities.
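The difference in failure behaviour can be shown with a small sketch. Everything in it is a simplification of our own: the `Service` class and both status functions are hypothetical, and the services are assumed not to depend on each other.

```python
class ServiceDown(Exception):
    """Raised when a service or its database is unavailable."""


class Service:
    """A hypothetical microservice with its own database connection."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.healthy = True

    def call(self) -> str:
        if not self.healthy:
            raise ServiceDown(self.name)
        return f"{self.name}: ok"


def monolith_status(features, shared_db_up):
    # One application, one database: a single failure hits every feature.
    return {f: ("ok" if shared_db_up else "down") for f in features}


def microservices_status(services):
    # Each service is checked on its own, so a failure stays local.
    status = {}
    for svc in services:
        try:
            svc.call()
            status[svc.name] = "ok"
        except ServiceDown:
            status[svc.name] = "down"
    return status


features = ["accounts", "transactions", "payments"]
services = [Service(name) for name in features]
services[2].healthy = False  # only the payments service fails

print(monolith_status(features, shared_db_up=False))
print(microservices_status(services))
```

In the monolith case all three features report "down", while in the microservice case accounts and transactions keep answering and only payments is affected.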

Microservice infrastructure — Containerisation and Orchestration

As always, magic comes with a price. In exchange for increased flexibility, scalability and resilience, more resources have to be put into the infrastructure and DevOps side. There can be hundreds of applications deployed and running independently in a microservice architecture, which means far more operational responsibility.

Normally there are four parts to offering banking apps. A server (1) runs the operating system (2) that runs the application server (3) that runs our application (4). We have to make sure that these 4 participants are always compatible with each other. When we use only 1 monolith application this isn’t a big deal. But imagine when we have to make sure that hundreds of microservices with dozens of application servers are still compatible with the operating system and every other dependency. This would be a nightmare. You could lose a lot of sleep.

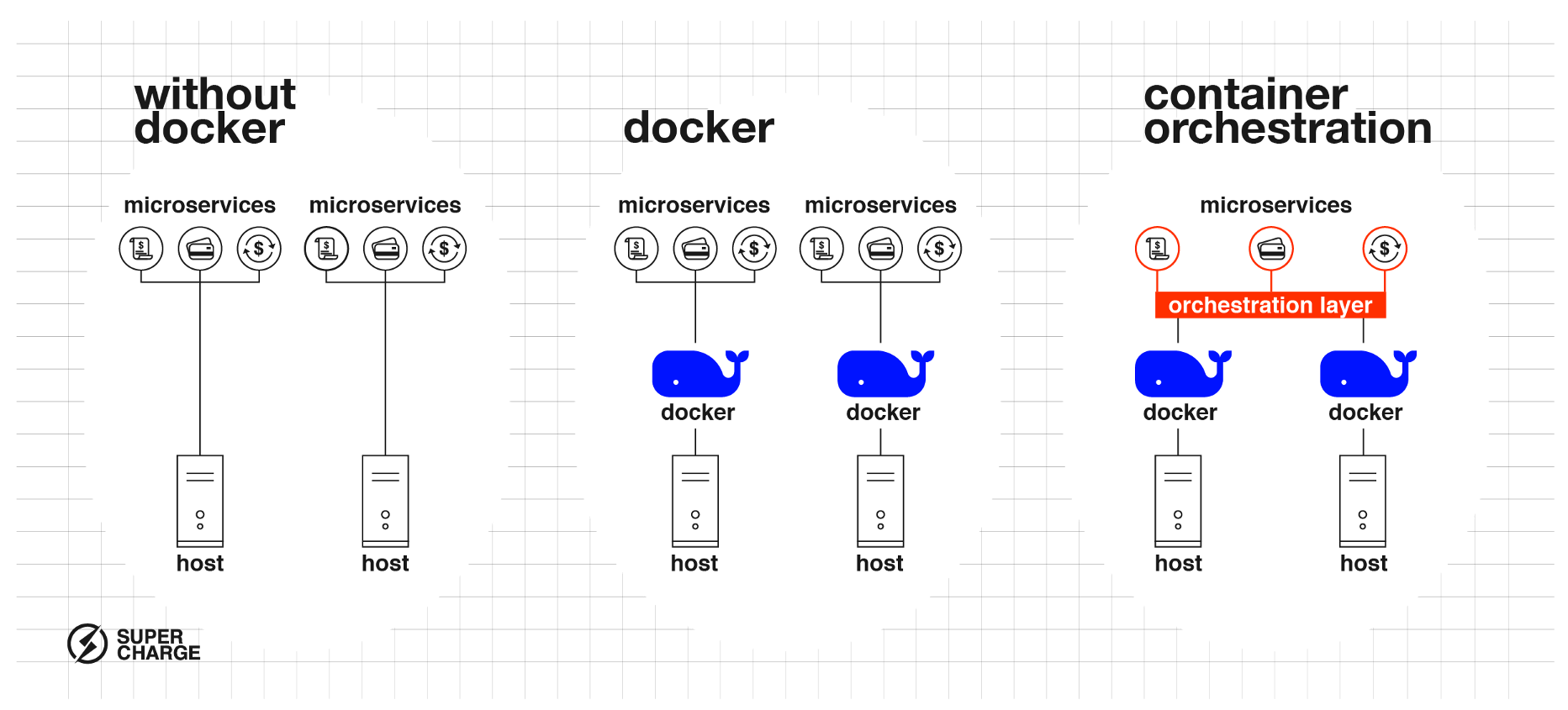

Figure 2: Container orchestration

Docker containers are designed to solve this issue. All your application developers need to do is package each microservice inside a Docker container and hand it over to the DevOps team, who will make sure it runs just fine. All the DevOps team needs to do is support running Docker containers, because each container carries the dependencies its application needs. Developers can write the microservices in any programming language of their choice, such as Java, Node.js or Scala. As long as they package them into Docker containers, the microservices will be able to run on the infrastructure.

Docker containers are a great tool to run your applications in a very flexible way. However, they cannot solve the scaling issue on their own. When we need to run 100 microservices, 4 instances each on 20 servers, well, to put it lightly, it can be a challenge.

It means starting and stopping 400 apps, keeping track of which ones are running and how many, and shuffling them between servers endlessly. Sounds inefficient, doesn’t it? This is where container orchestrators such as Kubernetes, OpenShift or Docker Swarm can help. They remove the burden of starting, stopping and moving the microservices to new servers. All we need to do is state how many instances of each microservice we need. The orchestrator takes care of running them and balancing them between the servers. This gives us a very powerful setup to manage scalability and reliability issues.
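The "state what you want, let the orchestrator do the rest" idea can be sketched in a few lines. This is a toy model under our own assumptions, not how Kubernetes, OpenShift or Docker Swarm are implemented: `schedule` and `reconcile` are hypothetical helpers, and real orchestrators also weigh CPU, memory, health checks and much more.

```python
from collections import Counter


def schedule(desired: dict, servers: list) -> dict:
    """Place every requested instance on the currently least-loaded server.

    desired maps a microservice name to the number of instances wanted.
    Returns a mapping of server name to the microservices placed on it.
    """
    placement = {server: [] for server in servers}
    for service, replicas in desired.items():
        for _ in range(replicas):
            target = min(servers, key=lambda s: len(placement[s]))
            placement[target].append(service)
    return placement


def reconcile(desired: dict, running: Counter) -> dict:
    """Return how many instances to start (positive) or stop (negative)."""
    names = set(desired) | set(running)
    diff = {n: desired.get(n, 0) - running.get(n, 0) for n in names}
    return {n: d for n, d in diff.items() if d != 0}


# The scenario from the text: 100 microservices, 4 instances each, 20 servers.
desired = {f"service-{i}": 4 for i in range(100)}
servers = [f"server-{i}" for i in range(20)]
placement = schedule(desired, servers)

print(sum(len(v) for v in placement.values()))  # 400 instances in total
print({len(v) for v in placement.values()})     # {20}: evenly spread

# If one instance of service-0 crashes, the gap to the desired state is:
print(reconcile({"service-0": 4}, Counter({"service-0": 3})))  # {'service-0': 1}
```

The team only edits the `desired` mapping; working out what to start, stop or move is left to the orchestrator.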

The new infrastructure makes it possible to easily introduce new features and new services without risking the stability of the system as a whole.

Conclusion

Microservices, containers and orchestration enable us to introduce cutting-edge new financial services next to old core systems, extract functionalities one by one and make them scalable and more reliable. This not only gives us a safer way to replace old core systems but also introduces state-of-the-art technologies that give us the opportunity to respond to customer needs in a truly agile way. Wait four minutes for your app to load before a €1000 transfer? By using microservices and containerisation, your customer will never need to wait long.